One of the most popular frameworks for designing curriculum today is Understanding by Design from Jay McTighe and Grant Wiggins. Their approach makes complete sense. You start by identifying the enduring understandings that you want students to construct, design assessments to let you know whether you have met your goal, and then design the curriculum backward around the assessments. Iterate as necessary. The problem is that the quality of the curriculum developed using Understanding by Design is all over the map, with the vast majority of it being fairly awful.

This inconsistency can be partially explained by how Understanding by Design is typically implemented: through one-off workshops where the trainer or facilitator is often reluctant to push back too hard or give critical feedback. But implementation issues aren't the whole story. Many of the exemplars, including curriculum units highlighted by McTighe and Wiggins themselves, are not that great either. The problem, as I see it, is that there is no way to know if the curriculum you are designing is any good when using Understanding by Design. The curriculum could be awesome; the curriculum could be terrible. There is no mechanism in Understanding by Design that lets you know either way... and our brains are very good at fooling us.

When I was working in Holliston, I worked with three 7th-grade science teachers over the summer to develop a chemistry unit. We started by identifying learning objectives and then designing an assessment. Designing the learning objectives went fairly smoothly; it felt like we were all on the same page. But designing the assessment was a struggle. I'd suggest an assessment, and the other three teachers would kind of exchange looks and then try to undermine what I had proposed. They were very respectful about it, but also very persistent. I was a little puzzled because they were very strong science teachers who were generally up for new ideas.

Finally, one of the teachers (Melissa) said to me, "Dave, our students can't do these assessments." This came at the end of two 8-hour days where we had been quietly butting heads. And she only said this to me because we had three more days left together and there was no sign that I was going to cave and accept an assessment that was nice but did not truly get at the heart of the learning objectives we had all agreed to. This ended up being a breakthrough moment for us because we went on to design a curriculum unit that was a huge success when we implemented it for the first time in the fall. (I am so proud of the work that we did together that the first product I developed after founding Vertical Learning Labs was an interactive textbook based on the unit called Chemistry from the Ground Up.)

Could we have achieved the same result using Understanding by Design? We could have used it, but it wouldn't have made the difference between an awesome unit and a good one. What made the difference was our commitment to a set of learning objectives and the realization that we could not reach those outcomes with our regular set of tools. I would not accept an assessment that did not actually measure whether students reached our outcomes, and the teachers would not water down the outcomes. Understanding by Design does not provide that kind of feedback. And once we realized that our regular tools were not good enough to do the job, we were freed to acquire and try new ones. It was like we could put all this baggage aside and engage in the task of designing a new chemistry unit with inquiry and not egos driving the process.

Something similar happened when I was in Groton-Dunstable. I was hired because the administration had been trying for years to get the math department at the middle school to adopt a standards-based math curriculum... without much success. I led a math program adoption committee of eight teachers, and we ended up deadlocked in June. I actually convinced all eight teachers to adopt a standards-based math program, but four of them wanted CMP 2 and four wanted Math Thematics. While below the surface the learning in both programs was similar, on the surface Math Thematics felt like a more traditional approach; it was more accessible to the teachers who were nervous about changing their practices.

Something that I've found at every middle school I've worked at is that middle school math teachers hate it when students always ask the teacher to walk them through how to solve a problem instead of grappling with it for themselves. Tasked with breaking the deadlock before the school year ended, I asked this question: Which is more likely to get students thinking for themselves, a procedural approach or a conceptual approach? And as a follow-up: How much are you prepared to risk to try to make that happen? The committee voted 7-0 with one abstention for CMP 2.

The teachers took a risk at Groton-Dunstable because they realized that they could either accept the status quo or try something different. For them, the potential gain outweighed the pain. There is an adage about a hammer and every problem looking like a nail. A corollary is that you won't learn or use new tools if you think that the hammer might be able to do the job. There are three things that are needed to create disruptive curriculum: (1) a good coach, (2) commitment to achieving something that feels impossible, and (3) accepting anything less than success as failure. Remember, try not. Do or do not. There is no try. Feedback is a bitch.

Friday, June 28, 2013

Saturday, June 22, 2013

Apple, Amazon, and Big Content

I've been following the e-books antitrust trial. From the beginning, I've been surprised that the DOJ has been alleging that Apple was the mastermind or ringleader of a conspiracy to raise e-book prices. I think that there is ample evidence that the major publishers worked together to raise e-book prices on bestsellers and used Apple's new iBookstore as leverage against Amazon. But saying that Apple orchestrated the whole thing and strong-armed the publishers seems like a massive stretch.

First, Apple's interest was in breaking Amazon's hold over the e-book market, not raising the prices of e-books. Second, what kind of leverage did Apple possibly hold over the publishers to force them to do anything? It seems much more likely that Apple saw that the publishers wanted to get out from under Amazon's thumb and came up with a mutually beneficial way for them to do it.

The funny thing is that this is exactly what Amazon did to enter the music business. The record companies were upset by Apple's pricing model: 99¢ for every song. The record companies wanted variable pricing. They wanted to be able to charge higher prices for the latest hits and popular songs. Apple refused. And iTunes was so dominant that the record companies couldn't do anything about it.

When Amazon decided to enter the music business, they knew that they needed to do something to compete against iTunes. They wanted the record companies to drop DRM. Now I'm sure that the record companies didn't want to drop DRM. They only agreed to sign on with iTunes in the first place because of Apple's FairPlay DRM technology. But an Amazon music store would give the record companies leverage against Apple, so they agreed. And once the Amazon music store opened with 99¢ tracks that were DRM-free, the record companies withheld the same DRM-free option from Apple until Apple agreed to introduce variable pricing and the price of hit songs rose to $1.29. (The price increase was bundled with higher quality encoding to soothe the consumer, but it was definitely a price increase.)

It seems like the case against the book publishers was pretty solid, which is why they all ended up settling with the DOJ. If the publishers had individually decided to sign on with Apple and then threatened to remove their books from Amazon unless Amazon agreed to change to the agency model, then I think they would have been fine. But by doing it in unison (and meeting secretly as a group to make sure that everyone was onboard), they behaved as a de facto monopoly. This is horizontal collusion.

I've read that, in order to prove vertical collusion against Apple (since Apple is not a publisher), the DOJ had to prove that Apple wasn't simply a member of the conspiracy but the instigator. I'm not sure if that is the case. In my opinion, the DOJ seems to have its blinders on and can't seem to see past the fact that the price of e-books rose because of what Apple did. Even the judge seemed blinded by that fact, although she seemed to see more nuance as the trial unfolded. I'm curious to see what the outcome of the trial is.

Thursday, June 20, 2013

Introduction to Game Design

On Monday I took a class on game design. The instructor was Seth Sivak, CEO of Proletariat. Seth's focus was on moment-based design: designing systems, mechanics, and stories that allow for interesting, fun, moving, emotional, and challenging moments. At first I was a little skeptical. Moments aren't the first thing I think of when I think about gaming. But the more Seth talked about it, the more it made sense.

When designing a game, Seth begins by asking himself a series of questions:

I'm in the middle of designing an educational game and, after taking this class, I realized that there were key moments in the back of my head that I wanted players to experience. Thinking about those key moments is what made me excited about creating the game in the first place... I think those moments are powerful and I want others to experience them, too. The thought of designing the game around those moments to highlight them and make them pop had never occurred to me, but now it seemed obvious.

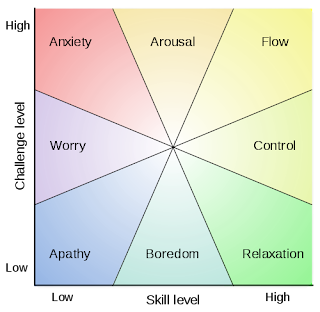

Seth also talked about experience architecture and interest curves, thinking about how well the sequence of events in a game will be able to hold the interest of the player. Flow is a term coined by Mihaly Csikszentmihalyi. It is the mental state of operation in which a person performing an activity is fully immersed in a feeling of energized focus, full involvement, and enjoyment in the process of the activity. I had read about flow as an educator, but I had never seen this particular diagram before:

Seth explained that your goal as a game designer is not to keep the player in the flow state the entire time, but to have them move from anxiety to arousal to flow to control to relaxation and then back again (hopefully avoiding worry, apathy, and boredom altogether).

I found the class both eye-opening and informative. Being immersed in a new discipline is fun and gives you new ways to think about things. Because I am an educator and not a game designer, I've been giving short shrift to the gaming and play side of my game. I tend to rely on the power of the learning experience to carry day and generate enthusiasm and engagement. But game design is another tool in my toolbox... one that I plan on putting to good use in the future.

When designing a game, Seth begins by asking himself a series of questions:

- What will the player feel?

- What is the actual moment that provides the emotional response?

- How will the player interact with the experience?

- Where/when does the experience take place?

- What role does the player have in the experience?

- Why would the player want to be part of the experience?

I'm in the middle of designing an educational game and, after taking this class, I realized that there were key moments in the back of my head that I wanted players to experience. Thinking about those key moments is what made me excited about creating the game in the first place... I think those moments are powerful and I want others to experience them, too. The thought of designing the game around those moments to highlight them and make them pop had never occurred to me, but now it seemed obvious.

Seth also talked about experience architecture and interest curves, thinking about how well the sequence of events in a game will be able to hold the interest of the player. Flow is a term coined by Mihaly Csikszentmihalyi. It is the mental state of operation in which a person performing an activity is fully immersed in a feeling of energized focus, full involvement, and enjoyment in the process of the activity. I had read about flow as an educator, but I had never seen this particular diagram before:

Seth explained that your goal as a game designer is not to keep the player in the flow state the entire time, but to have them move from anxiety to arousal to flow to control to relaxation and then back again (hopefully avoiding worry, apathy, and boredom altogether).

I found the class both eye-opening and informative. Being immersed in a new discipline is fun and gives you new ways to think about things. Because I am an educator and not a game designer, I've been giving short shrift to the gaming and play side of my game. I tend to rely on the power of the learning experience to carry day and generate enthusiasm and engagement. But game design is another tool in my toolbox... one that I plan on putting to good use in the future.

Monday, June 17, 2013

And the iSheep Shall Inherit the Earth

I bought my first Mac in 1986, and I've used Apple products ever since. I currently own a 13" MacBook Pro, an iPhone 4S, and an iPad 2. I guess that you could call me an iSheep.

iSheep is a derogatory term for someone who has been brainwashed into joining the Apple cult or is so image-conscious (yet sadly out of touch) that they must own "teh shiny." iSheep buy whatever Apple is selling, regardless of whether they need it or whether it is any good.

An example of iSheep market power is the adoption of the USB standard by the computer industry. Released in 1996, USB initially struggled to gain traction. Computer makers were reluctant to build USB ports into their computers because there were barely any compatible peripherals. Peripheral makers were reluctant to build USB into their devices because there were barely any computers with USB built-in. Steve Jobs changed all that in 1998 with the introduction of the iMac. The iMac did away with all other legacy ports, forcing users to buy all new peripherals using USB. The iSheep lapped it up... and the USB market boomed.

Another example is iMovie. Apple started bundling iMovie with iMacs in 1999... and home movies with cheesy iMovie effects boomed. Microsoft countered by bundling Windows Movie Maker with Windows XP in 2001, but iSheep continued to bang on about how it was so much easier to make digital movies on a Mac. iMovie became synonymous with consumer video editing software in the same way that Kleenex became synonymous with facial tissues. I've never seen data on this, but I'd love to know the percentage of iMac owners who used iMovie compared to the percentage of PC owners who used Movie Maker.

Having iSheep around is handy if you happen to use Apple products. A couple of weeks ago, Chitika released a study stating that 93% of active iPhone users were using iOS 6. This is great because it encourages app developers to take advantage of the latest APIs. The percentage of Android users on Jelly Bean is much lower. This is only partly due to the fact that Apple is responsible for pushing iOS updates to users while carriers and OEMs are responsible for pushing Android updates to most users. Apple has also done an amazing job of training iSheep to push the update button when it pops up. And buy things through iTunes.

When tech writers and analysts discuss the app stores for Windows Phone and BB10, they invariably talk about the twin challenges of convincing app developers to develop for a platform with few users and convincing users to invest in a platform with few apps. But what would happen if, instead of announcing iOS 7 at WWDC a few days ago, Apple had announced an entirely new mobile OS for phones and tablets that would be incompatible with iOS apps? Would developers wait for the users, or would developers pile in, confident that the iSheep will be there? For sheep, that's one powerful market demographic.

Wednesday, June 12, 2013

Yoda and Theories of Action

I learned about theories of action when I attended the Harvard Institute for School Leadership a few summers ago. The idea is simple: establish a cause-and-effect relationship between your actions and your goals. If I do X, then Y will happen. If I do X and Y doesn't happen, then my theory of action doesn't work and I need to revise it. Consciously identifying your theory of action creates a feedback mechanism that can help you hone your actions, moving you closer to your goal.

Many people have the stated goal of improving education on a wide scale. They want to change the system. Yet they repeatedly do the same things over and over again without much effect. When things don't work out, they identify dozens of intervening actions by other actors that prevented them from achieving their goal. They don't learn from their failure because they don't perceive their actions as failing. They never really believed that if they did X, then Y would happen. They believed that if they did X, then Y would happen if a bunch of other people did a bunch of other things at the same time.

In The Empire Strikes Back, Yoda tells Luke Skywalker, "Try not. Do or do not. There is no try." At the time I remember thinking, "Harsh toke, dude." (Or words to that effect... I was only eleven at the time.) But if there is no causal relationship between your actions and your goals, how do you evaluate whether you are taking the correct actions or not? Trying to improve education on a wide scale is a massively complex undertaking, and it is the height of hubris to believe that you are going to stumble on an effective theory of action on your first try. Trying is not enough. And it is difficult to learn without the feedback that comes from failure.

I see so many ed reformers scattered across the landscape, each fighting the good fight and trying to hold their own little piece of the battlefield, waiting for the cavalry to come charging over the hill. Except it never does. Maybe it is time for us to try some new theories.

Tuesday, June 11, 2013

Evaporation and Condensation

So now I am utterly confused. Do evaporation and condensation occur at the same time, or is it one or the other but never both? I thought that I knew the answer to this question and I was prepared to rant a little bit about the state of science education and how we are doing a disservice to kids by not raising this central question (and many others like it), but now I feel the need to take a step back first.

What is evaporation? It is the transfer of particles from the liquid state to the gas state from the surface of the liquid. Okay. Seems clear enough and there is some level of consensus around this definition. And condensation? It is the transfer of particles from the gas state to the liquid state. So far, so good.

However, the problem with these definitions is that they do nothing to help me answer the question: Do evaporation and condensation occur at the same time, or is it one or the other but never both? There is too much ambiguity.

Here is what I need to know to clear up my confusion: Is evaporation (1) the transfer of a particle from the liquid state to the gas state from the surface of the liquid, or (2) the net transfer of particles from the liquid state to the gas state from the surface of the liquid?

The first definition focuses on a single particle. If one particle breaks away from the surface of the liquid state and enters the gas state, then evaporation occurred. The second definition focuses on the system as a whole. Evaporation only occurs if more particles are breaking away from the surface of the liquid state and entering the gas state than are leaving the gas state and entering the liquid state.

Let's consider a glass of water on a warm day. Water molecules are breaking away from the surface of the liquid and entering the gas state. Water molecules in the air are leaving the gas state and entering the liquid state. Because there are more water molecules entering the gas state than the liquid state, the amount of water in the glass is decreasing over time.

Now let's drop a bunch of ice cubes into the glass of water. Water molecules are still breaking away from the surface of the liquid and entering the gas state. Water molecules in the air are still leaving the gas state and entering the liquid state. However, the addition of the ice cubes has shifted the rates of these two parallel processes, and now more water molecules are entering the liquid state than the gas state, and the amount of water in the glass is increasing over time. (Droplets of liquid water are forming on the outside of the glass, but condensation is happening in the glass as well. It's simply easier to point out these visible droplets of water as evidence of condensation than measure the amount of liquid water in the glass.)
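To make the difference between the two definitions concrete, here is a minimal Python sketch of the two glass-of-water scenarios above. The rates are made-up numbers chosen only for illustration (they are not measurements), and the function name `classify` is hypothetical. The sketch simply labels the same moment in time under definition 1 (per particle) and definition 2 (net transfer).

```python
def classify(evaporation_rate, condensation_rate):
    """Label one moment in time under both candidate definitions.

    Rates are molecules per second crossing the liquid surface
    (illustrative, made-up units and values).
    """
    # Definition 1: a process "occurs" if any particle makes that transition.
    per_particle = []
    if evaporation_rate > 0:
        per_particle.append("evaporation")
    if condensation_rate > 0:
        per_particle.append("condensation")

    # Definition 2: only the direction of the net transfer gets a name.
    net = evaporation_rate - condensation_rate
    if net > 0:
        net_label = "evaporation"
    elif net < 0:
        net_label = "condensation"
    else:
        net_label = "equilibrium"
    return per_particle, net_label


# Hypothetical rates for the two scenarios above (not measured values).
warm_glass = classify(evaporation_rate=120, condensation_rate=80)
iced_glass = classify(evaporation_rate=60, condensation_rate=90)

print(warm_glass)  # (['evaporation', 'condensation'], 'evaporation')
print(iced_glass)  # (['evaporation', 'condensation'], 'condensation')
```

Under definition 1 both processes show up in both scenarios; under definition 2 each scenario gets exactly one label. That is the ambiguity I am trying to pin down.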

I don't particularly care how we define evaporation and condensation (okay, I do), but I do want kids and adults to understand evaporation and condensation at this level. We are teaching kids about evaporation and condensation, and they are building mental models about how the world works on an atomic and molecular level; yet, we aren't probing to help us or our students see if those mental models are accurate or robust. And what about the kids themselves? Why aren't they probing their own mental models? Why isn't it nagging at them that they don't really understand what is going on? Is it because they don't care, don't expect clarity, or don't expect their teachers to be able to provide clarity?

Well, I do expect clarity for myself. This is something that I expect to understand, and I think that I do on an atomic and molecular level. Where I feel myself on shaky ground is the actual definitions of evaporation and condensation.

Here is what one of my college physics textbooks says on the matter: "Air normally contains water vapor (water in the gas phase) and it comes mainly from evaporation. To look at this process in a little more detail, consider a closed container that is partially filled with water (it could just as well be any other liquid) and from which the air has been removed. The fastest moving molecules quickly evaporate into the space above. As they move about, some of these molecules strike the liquid surface and again become part of the liquid phase; this is called condensation." The implication is that evaporation and condensation occur on a per particle basis (definition 1).

Now let's take a look at Wikipedia: "Evaporation is a type of vaporization of a liquid that occurs from the surface of a liquid into a gaseous phase that is not saturated with the evaporating substance." The implication is that evaporation ceases when the gaseous phase is saturated and the system has reached equilibrium (definition 2).

And here is what I turned up when I googled for a scientific definition of evaporation:

"The change of a liquid into a vapor at a temperature below the boiling point. Evaporation takes place at the surface of a liquid, where molecules with the highest kinetic energy are able to escape. When this happens, the average kinetic energy of the liquid is lowered, and its temperature decreases."

"The process by which molecules undergo the spontaneous transition from the liquid phase to the gas phase. Evaporation is the opposite of condensation."

"It is the process whereby atoms or molecules in a liquid state (or solid state if the substance sublimes) gain sufficient energy to enter the gaseous state."

"Evaporation occurs when a liquid changes to a gas at a temperature below its normal boiling point."

"Evaporation is the slow vaporization of a liquid and the reverse of condensation. A type of phase transition, it is process by which molecules in a liquid state spontaneously become gaseous."

So is it definition 1 or definition 2? Has the scientific community reached consensus? If some of you can help me out, please weigh in with your comments.

Monday, June 10, 2013

The Surface of No Compromises

I'm always perplexed when I read comments on tech sites that go something like this: "How can you complain about feature X? Isn't it better than not having feature X?" Many of these commenters work in STEM fields and claim to know something about the engineering design process; yet somehow they believe that the inclusion of one feature has no impact on any of the other features.

One recent example of this is the Microsoft Surface. Steven Sinofsky famously described Windows 8 as "no compromise," and many Surface fans have taken to describing the device the same way: as a tablet and a laptop with no compromise.

This no-compromise talk is supposed to mean that the Surface is just as good a tablet as a pure tablet, and just as good a laptop as a pure laptop. But that doesn't seem to be the case. Let's start with the Surface Pro. As a laptop, it has the specs and price of an ultrabook, but there are certainly compromises in the form factor. Because of the design decisions that Microsoft made to produce a tablet/laptop hybrid, the keyboard isn't as good, the screen has a fixed viewing angle, and the Surface does not have a flat bottom. As a tablet, it is heavy and has a short battery life.

Some people counter that, if you don't like using the Surface Pro as a laptop when curled up on the couch, then just use it as a tablet. But I use Microsoft Office on my laptop on my couch all the time, and using the desktop version of Office on a tablet with an onscreen keyboard doesn't seem like a good solution; I'd much rather use a tablet-friendly and touch-optimized version of Office. And sure, the Surface Pro is far more powerful than almost every other tablet out there, but what good is all that power if there aren't any tablet apps that take advantage of it?

As for Surface RT, it is a perfectly good tablet, but it doesn't have the rich selection of tablet apps that the iPad or Nexus 7 has. And as a laptop, it is underpowered. It's more like a netbook than a no-compromise laptop, and it only runs a handful of pre-loaded desktop apps.

I think it is interesting to consider the different approaches that Microsoft and Apple took with their operating systems. In Windows 8, Microsoft made as much of their OS tablet- and touch-friendly as possible. Anything that they couldn't get to in time or figure out how to make work better, they left in, simply dumping the user back to the desktop. In iOS, Apple made as much of their OS tablet- and touch-friendly as possible. Anything that they couldn't get to in time or figure out how to make work acceptably, they took out.

The Surface seems to have three addressable markets: (1) those looking to use the Surface Pro as their primary machine, (2) those looking for a laptop as a companion device, and (3) those looking for a tablet as a companion device.

My laptop is my primary machine, and I don't think that I could live with anything smaller than a 13" screen. In fact, I would prefer a 15" screen, but I'm willing to trade a little screen real estate for portability. I also want at least 256 GB of storage. My 2010 laptop only has a 128 GB SSD, and I'm bumping up against that constraint all the time; I have to use an external HD for video work and I'm constantly looking for files and apps I can delete to free up more space. My next laptop is going to have more storage. I feel like the only people who are going to be interested in the Surface Pro are those who travel a lot and are already in the market for an 11" ultrabook, and I'm not sure how large that market is.

People who use a desktop computer as their primary machine might consider a Surface for when they are on the go. The Surface Pro is kind of expensive as a companion device, but if you can afford it, it is certainly nice. The Surface RT could have the same appeal that the netbook had, but the price is much higher. If you believe that the netbook craze was caused by the portable form factor and not the low price, then this could be a large market. But if the primary appeal of a netbook was its sub-$300 price point, then Surface RT is simply too expensive. It also may not run all of the desktop software that people may want. The rumor was that there was a high return rate on netbooks shipping with Linux for this reason.

Finally, there are people shopping for a tablet. Surface RT is more expensive than Android tablets and has fewer tablet apps than Android tablets or iPads. The one thing going for it is Microsoft Office. If that is a killer app for your tablet, then the Surface RT might be the tablet for you. I'm not sure if that many people want to use Office on their tablets, especially if it means giving up other things. I guess we'll have to see, especially when more tablet apps are released on the Windows Store.

I think that Microsoft did some nice work on the Surface and that the tablet/laptop hybrid might have a future. If a hybrid ever got to be 13" and under 1.5 pounds without the keyboard, then I could definitely see the appeal. But even under those conditions, there would still be compromises. There always are.

Friday, June 7, 2013

Red and Yellow Chips

A common way to teach students how to add and subtract integers is to use zero pairs. If negative integers are represented by red chips and positive integers by yellow chips, then one red chip and one yellow chip is a zero pair: they have a sum of zero. Since a zero pair has a sum of zero, students learn that they can add or remove zero pairs from a pile of chips without changing the value of the pile, and that this can make the addition and subtraction of integers easier.
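For readers who haven't seen the chips in action, here is a minimal Python sketch of the idea. The function names are my own, and the take-away approach to subtraction (adding zero pairs until there are enough chips to remove) is my assumption about how the chips are typically used in class, not a canonical procedure.

```python
def to_chips(n):
    """Represent integer n as a pile (yellow, red): yellow = +1, red = -1."""
    return (max(n, 0), max(-n, 0))


def value(pile):
    """The value of a pile is yellow chips minus red chips."""
    yellow, red = pile
    return yellow - red


def remove_zero_pairs(pile):
    """Remove every red/yellow pair; the value of the pile is unchanged."""
    yellow, red = pile
    pairs = min(yellow, red)
    return (yellow - pairs, red - pairs)


def add(a, b):
    """Add by combining two piles and then removing the zero pairs."""
    yellow_a, red_a = to_chips(a)
    yellow_b, red_b = to_chips(b)
    return value(remove_zero_pairs((yellow_a + yellow_b, red_a + red_b)))


def subtract(a, b):
    """Subtract by taking b's chips out of a's pile.

    If the pile lacks the chips to take away, add zero pairs first.
    """
    yellow, red = to_chips(a)
    take_yellow, take_red = to_chips(b)
    extra_pairs = max(take_yellow - yellow, take_red - red, 0)
    yellow, red = yellow + extra_pairs, red + extra_pairs
    return value((yellow - take_yellow, red - take_red))


print(add(3, -5))       # 3 yellow and 5 red: remove 3 zero pairs
print(subtract(2, -3))  # add 3 zero pairs, then take away 3 red
```

In the second call, the pile of two yellow chips has no red chips to take away, so three zero pairs go in first; taking away three red chips then leaves five yellow chips.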

Using red and yellow chips and zero pairs arose from the desire to help students develop mental models for adding and subtracting integers. Mental models are theories that we generate to help us explain our experiences and the world around us. As Eric Mazur, Professor of Physics at Harvard, pointed out during the panel discussion I attended on Tuesday, knowledge transfer is only the first stage of learning; after that, the individual still has to make his or her own meaning out of that knowledge.

Unfortunately, the model used to represent integers (red and yellow chips) has been conflated with the mental models that students develop in their heads. Just because I use red and yellow chips to model integer addition and subtraction does not mean that the mental model that I construct for myself has anything to do with red and yellow chips.

Mental models are not transferred; they are constructed. The reason why we model integer addition and subtraction with red and yellow chips is not to give students a mental model; it is to provide them with a set of experiences that we hope will lead them to construct an effective mental model for themselves. As teachers, we have no way of knowing what that mental model might look like. All we can do is place students in situations where they can apply and test the mental model they are developing, and give them feedback they can use for revision and extension.

I'm not a fan of the red and yellow chips. First, using red and yellow chips doesn't really build on any existing mental models or experiences that most students have. How often have students encountered and interacted with zero pairs in real life? Second, I don't think that most mathematicians use zero pairs when thinking about integer addition and subtraction. We should always be wary when we expect beginners to think one way while experts think another way. A beginner's thinking may be more primitive or simplistic, but in general, it should not be different.

I prefer an approach for learning about integer addition and subtraction that I developed during the Florida recount in the 2000 presidential election. I've since changed the scenario to a battle of the bands held at a school gymnasium.

Imagine that there are two popular bands playing, one led by Sue Negative and the other by Joe Positive. You are manning the door. At the start of the event, there are an equal number of Negative and Positive fans. You don't know how many fans of each there are, just that the numbers are equal. Throughout the event, fans enter and leave. Your job is to keep track of which band has more fans in the gymnasium.

I prefer this scenario because it does build on existing mental models. My nieces and nephews watch each other like hawks and always have a running tally in their heads of who has gotten more treats over the course of a party or family outing. It also uses integers in a scenario where integers represent vectors. As you man the door, you are tracking changes to quantities and relative quantities, not the actual quantities themselves. This is how we encounter integers most often in real life, and I believe that this is how most mathematicians think about positive and negative numbers. Contrast this with the red and yellow chip scenario where integers represent scalar quantities.

But the scenario is not the mental model; the scenario simply provides concrete experiences that students use to develop their own mental model. The teacher should help students probe those mental models as they are being developed to uncover misconceptions and increase flexibility and robustness. If given a sequence of entrances and exits, how does a student figure out which band has more fans in the gymnasium? Does she always process sequentially or has she realized that it is more efficient to group the movement of the Negative fans and the Positive fans separately and then combine them in the end? Does another student recognize that, if all you care about is which band is ahead in terms of fans, three Negative fans entering the gymnasium has the same effect as three Positive fans leaving the gymnasium?
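The two strategies in those questions can be sketched in a few lines of Python. This is only an illustration under my own assumptions: the event format (band, direction, count) and the function names are hypothetical, and the "lead" is defined here as Positive fans minus Negative fans, starting at zero because the crowd starts out even.

```python
def lead_sequential(events):
    """Process each entrance or exit in order, updating the running lead."""
    lead = 0  # Positive fans minus Negative fans; the crowd starts even
    for band, direction, count in events:
        sign = 1 if band == "positive" else -1
        if direction == "leave":
            sign = -sign  # a fan leaving has the opposite effect of entering
        lead += sign * count
    return lead


def net_change(events, band):
    """Net change in one band's fans: entrances minus exits."""
    return sum(
        count if direction == "enter" else -count
        for event_band, direction, count in events
        if event_band == band
    )


def lead_grouped(events):
    """Group each band's movements separately, then combine at the end."""
    return net_change(events, "positive") - net_change(events, "negative")


# A made-up sequence of door events.
events = [
    ("negative", "enter", 3),
    ("positive", "enter", 5),
    ("positive", "leave", 2),
    ("negative", "leave", 1),
]

print(lead_sequential(events), lead_grouped(events))
```

Both functions give the same lead for any sequence, and `lead_sequential([("negative", "enter", 3)])` matches `lead_sequential([("positive", "leave", 3)])`, which is the equivalence the last question is probing for.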

Thinking about how to guide students as they construct mental models is a big part of what we do at Vertical Learning Labs.

Wednesday, June 5, 2013

Google Glass: Always On

I read a bunch of tech sites and spend way too much time perusing the comments. One of the hot topics of conversation lately is Google Glass. Some people have already declared it the NEXT BIG THING, while others have declared it a flop.

I won't really know what I think about Google Glass until it is out and I can try one for myself, but I do know that I hate carrying stuff. Last night, I attended a panel discussion in downtown Boston and decided to make a day of it. I parked outside of Alewife station, had a late lunch at Jasper White's Summer Shack (soft-shell crab BLT), rode the T into Park Street, saw Star Trek Into Darkness at the AMC Loews, and then met up with friends for the talk.

The day was warm, but I knew that it might get a little chilly by the time the talk let out at 8:30. I decided against bringing a coat with me because I didn't want to have to lug it around for six hours in case I needed it for fifteen minutes; I'd rather be cold. If the forecast had been for rain in the evening, that may have changed the equation a bit. A tote umbrella is less obtrusive than a coat, and being wet is worse than being cold, especially since I'd stay wet for the remaining T- and then car-ride home.

I tend to keep the things that I need to carry to a minimum. I wear glasses and a wristwatch, and carry a wallet, a smartphone, and a set of keys. I don't mind the wristwatch, wallet, smartphone, and keys because I generally don't notice them when I'm not using them. I mind the glasses, but I am using them all the time and couldn't really function without them.

So where does Google Glass fit into all of this? I've read that Glass has only three hours of battery life when the display is on and that it isn't foldable, so I can't put them away like a pair of sunglasses. This means that I am going to use Glass for a maximum of three hours a day while wearing them for twelve.

I don't believe that Glass will be successful in the market if users are only wearing them under special circumstances. For Glass to be successful, it has to be something that the user is prepared to wear every day while out and about. If Glass can disappear on me like my wristwatch does when I'm not looking at it for the time, then it makes sense. If I'm going to be noticing it the whole time I'm not using it, then it doesn't seem to make sense to me.

Hello World

Welcome to Building Blocks. My name is David Ng and I am the founder and Chief Learning Officer of Vertical Learning Labs.

This is a personal blog... a place where I can put down my thoughts while they are still in a semi-raw state. Some of these posts may evolve into lengthier and more formal pieces. Many of them will disappear into the huge sucking void that we call the internet. I'm writing mostly for myself, to organize my own thoughts, but I welcome comments and would enjoy interacting with a community of other thinkers and learners.

Right now I am committing to a minimum of three posts a week. This is the first. I'm curious to see how this little experiment will turn out.
